Recently, at VMware Explore, VMware and AWS announced general availability of the much-anticipated integration of Amazon FSx for NetApp ONTAP NFS shares for use in their VMware Cloud virtualization stack. This article covers the benefits and considerations so you can see whether it fits with your cloud journey.

I tried to create a title for this post that wasn’t incredibly long, without using a metric ton of acronyms. Fair warning: this post is going to be acronym heavy, even more so than usual, since we’re combining technical concepts across three acronym-pushing tech companies.

TL;DR

VMware’s stack in AWS, VMC, now supports external datastores from FSx for NetApp ONTAP.

So, what’s going on?

A few years back VMware started to deploy their virtualization stack in AWS. While AWS has a lot of compute functionality through EC2 instances, it’s a much different management methodology than many on-premises customers are used to after years of managing everything through VMware vSphere. There’s also a bevy of networking and security differences that admins need to adjust to. And, to top that all off, there’s no direct way to transition from an on-premises VMware environment to a native AWS deployment. There are plenty of 3rd party applications available to make the transition, but VMware wanted their users’ experience to be as simplified and consistent as possible.

Thus, VMware Cloud was born. VMC for short.

VMware Cloud on AWS is the official name, I think. It’s hard to keep track of actual names as marketing departments have the tendency to change things around frequently. My colleagues and I just call it VMC, or VMware in AWS. This is not to be confused with the VMware stack in Azure, Azure VMware Solution (AVS). Or the stack in Google, Google Cloud VMware Engine (GCVE).

Now the VMC solution is pretty straightforward. It’s essentially vSAN – VMware’s hyperconverged storage and compute stack – running on physical servers in AWS. Simple enough, but it creates a few problems. First, there’s still a bit of a wall between VMware and native cloud services. Second, and this is what we’ll be covering in this article, vSAN scales compute and storage together. Each server provisioned comes with CPU, memory, and storage. If you need more compute or storage then you need to provision more servers.

This becomes a problem if you need more storage resources than associated compute. As you scale VMC servers to support that capacity you also scale compute and the associated costs. This can create an imbalance where you’re paying a premium for hardware you don’t need, making VMC a lot more expensive than it would otherwise be.
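To make the imbalance concrete, here is a minimal sketch of how host count behaves when compute and storage scale together. The workload and per-host figures are hypothetical, picked only for illustration; they are not real VMC host specs.

```python
import math

def hosts_needed(compute_units, storage_tib, per_host_compute, per_host_storage_tib):
    """Hosts required when every host adds compute and storage together.

    Returns (provisioned, needed_for_compute, needed_for_storage).
    """
    for_compute = math.ceil(compute_units / per_host_compute)
    for_storage = math.ceil(storage_tib / per_host_storage_tib)
    # The larger of the two requirements dictates how many hosts you pay for.
    return max(for_compute, for_storage), for_compute, for_storage

# Hypothetical storage-heavy workload and host size.
provisioned, for_compute, for_storage = hosts_needed(
    compute_units=100, storage_tib=200, per_host_compute=36, per_host_storage_tib=20
)
print(f"compute needs {for_compute} hosts, storage needs {for_storage}, you pay for {provisioned}")
```

In this made-up case storage alone forces the host count well past what compute requires, which is the gap an external datastore is meant to close.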

Enter AWS & NetApp

As VMware developed the VMC solution they knew that they had to address this scalability concern. They also knew that they needed an AWS native solution to provide consistency of support and service. Along comes AWS’s in-house implementation of NetApp’s Cloud Volumes ONTAP – AWS FSx for NetApp ONTAP. You can read a whole lot more on what that is and how it works here.

Not sure which came first, the chicken or the egg, but it was a match made in “you got your chocolate in my peanut butter” heaven. After a long courting period of hot anticipation, Early and Initial access periods, the solution went full GA just a short while ago.

How FSxN solves the vSAN problem

Simply put, FSxN is an external datastore provider, à la ONTAP presenting datastores on-premises. This allows a VMC admin to scale storage independently in a cost-efficient manner. Not only can you stack storage behind FSxN without having to provision additional vSAN nodes, but you can also benefit from FSxN’s general cost savings on data, including deduplication, compression, and automatic tiering of cold data down to S3.

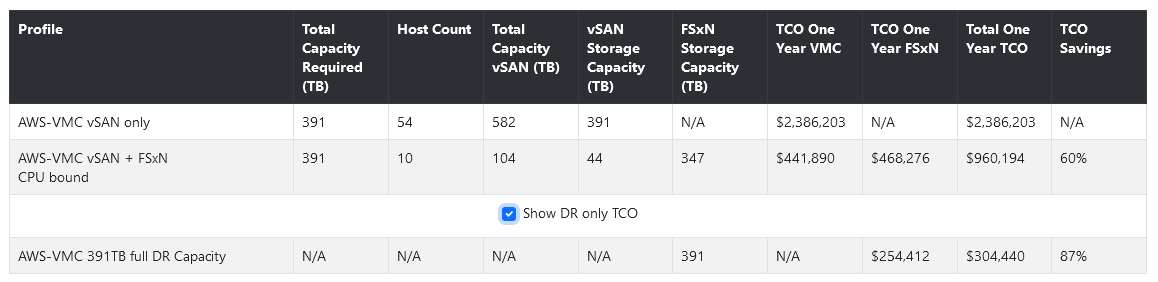

While not every environment stands to benefit, NetApp put together a TCO calculator to identify those that will. I’ve seen customers where it’s essentially “Nah, just keep it in VMC native” and others who can save between 10% and 60%. The best part is that you can upload an RVTools report (still one of the greatest tech gifts to humanity).

I emphasized external datastore provider earlier because you can also use FSxN to present NAS and iSCSI volumes directly at the VM guest level. You can utilize both methods at the same time depending on the best fit for your data’s use case. When having discussions with sales reps, engineers, etc. it’s vital to distinguish which connectivity method you’re referring to. Both have different usage considerations and I keep encountering scenarios where someone’s mind is in a different place and those start to bleed over.

Considerations

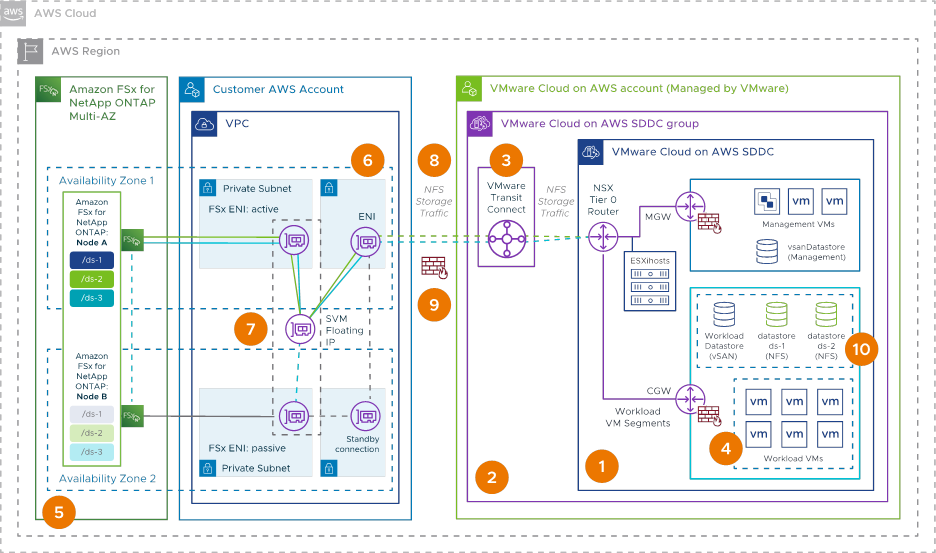

(The best ‘everything’ architecture diagram I’ve found, via NetApp)

Networking. Dread it, run from it, networking concerns arrive all the same.

FSxN uses a floating IP address (ENI) to facilitate NFS failover between Availability Zones. VMC doesn’t quite know how to handle this natively just yet, so data needs to flow over a Transit Gateway. Other terms for the gateway include SDDC Group and vTGW, you know, just to keep things interesting.

This is important because the Transit Gateway is a metered connection. AWS currently charges $0.02 per GB of data passed through. If you’re pushing the maximum throughput that FSxN can do – 2 GB/s – you can see how that would add up.

It’s a relatively easy concern to mitigate. When thinking about VM data placement, consider which systems are going to push lots of traffic to/from the datastore and think about putting those on the VMC native datastores. The aforementioned TCO calculator has a section for the TG costs as well… buuuuuttttt it’s more about ongoing costs. It doesn’t take into consideration initial seeding costs if you’re moving data from a VMC datastore to FSx via Storage vMotion (SnapMirror from ONTAP to FSxN doesn’t have this issue).
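For a rough feel of the Transit Gateway charge, the arithmetic can be sketched as below. It assumes the $0.02 per GB rate quoted above still applies (check current AWS pricing) and ignores every other charge; the 50,000 GB seeding size is a hypothetical figure.

```python
TGW_RATE_USD_PER_GB = 0.02  # rate quoted in this post; an assumption, not a price lookup

def transfer_cost(gb, rate=TGW_RATE_USD_PER_GB):
    """Cost in USD of pushing `gb` gigabytes through the Transit Gateway."""
    return gb * rate

def sustained_cost_per_hour(gb_per_second, rate=TGW_RATE_USD_PER_GB):
    """Hourly cost in USD of holding a steady throughput through the gateway."""
    return transfer_cost(gb_per_second * 3600, rate)

# Ongoing cost at the 2 GB/s FSxN maximum mentioned above.
print(f"2 GB/s sustained: ${sustained_cost_per_hour(2):,.2f} per hour")

# One-time seeding cost for a hypothetical 50,000 GB Storage vMotion.
print(f"Seeding 50,000 GB: ${transfer_cost(50_000):,.2f}")
```

Few workloads sit at the maximum around the clock, so treat the sustained figure as a ceiling rather than a forecast.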

Another consideration is how the networking scales with VMC hosts. Right now AWS limits each host connection to about 500 MB/s. If you have a single FSxN datastore, even though the theoretical limit of FSxN is 2 GB/s, you will only see a fraction of that. If you have a small number of VMC hosts you can mitigate this by creating multiple datastores, each getting its own connection, which then creates more throughput in aggregate. Larger VMC clusters are less at risk since workloads end up getting distributed across the scaling nodes and their datastore connections.
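A back-of-the-napkin model of that scaling might look like the following. It is a deliberate simplification: it assumes one connection per host per datastore, each capped at roughly 500 MB/s, all sharing a single 2 GB/s FSxN ceiling. Real throughput depends on workload placement and plenty of other factors this ignores.

```python
def aggregate_throughput_mb_s(hosts, datastores,
                              per_connection_mb_s=500, fsxn_limit_mb_s=2000):
    """Best-case aggregate throughput in MB/s under the simplified model.

    Assumes every host opens one connection to every datastore and that
    all datastores live on the same FSxN file system.
    """
    connections = hosts * datastores
    return min(connections * per_connection_mb_s, fsxn_limit_mb_s)

for hosts, datastores in [(2, 1), (2, 2), (6, 1)]:
    total = aggregate_throughput_mb_s(hosts, datastores)
    print(f"{hosts} hosts x {datastores} datastore(s): up to {total} MB/s")
```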

Part of FSxN’s native functionality is the ability to tier cold blocks from the local storage down to S3 to reduce costs. While seemingly rare, I have seen VMFS throw a fit when randomly deciding to fiddle with cold blocks in a similar environment on-prem. The consistent and native latencies in an FSxN environment should not be a concern for most workloads. That said, I would still recommend setting the tiering policy for critical workloads, such as databases, to none.
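If you manage FSx from scripts, one way to set that policy is the FSx `UpdateVolume` API through boto3. This is a sketch rather than a tested runbook: the volume ID is a placeholder, and it assumes boto3 is installed with credentials and a region already configured.

```python
def tiering_none_request(volume_id):
    """Build the UpdateVolume arguments that set the tiering policy to NONE."""
    return {
        "VolumeId": volume_id,
        "OntapConfiguration": {"TieringPolicy": {"Name": "NONE"}},
    }

def disable_tiering(volume_id):
    """Apply the NONE tiering policy to an FSx for ONTAP volume."""
    import boto3  # imported here so the request builder works without boto3
    return boto3.client("fsx").update_volume(**tiering_none_request(volume_id))

# Placeholder volume ID; swap in the volume backing your critical datastore.
print(tiering_none_request("fsvol-0123456789abcdef0"))
```

Calling `disable_tiering(...)` sends the change; the same setting is also available in the FSx console and the ONTAP CLI if you’d rather click or type it.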

It’s also worth noting that VMC doesn’t support single-AZ instances of FSxN just yet. Traditional backup integration and failover capabilities like you would see in an on-premises VM+ONTAP stack aren’t there yet either.

VMware is working on a way to mitigate these issues with a healthy roadmap that will improve performance and simplicity, making pretty much everything I just wrote moot. As for when, you and I know trying to stick a pin on a date is a fool’s errand. I will absolutely come back and edit this post as soon as updates come my way.

Deployment

If I had a deeper expense account I’d love to spin up an environment and share a step-by-step guide, but alas. Instead I want to point you to this nifty click-through demo. Normally I hate these kinds of things but, all things considered, this is a solid use case. Now they just need a way to skip ahead…

It starts with a standard FSx configuration which is pretty straightforward. Following that is the VMC SDDC configuration then the AWS transit gateway. Then on into the routing, security groups, and back to VMC to mount the datastore. After that the demo goes through a storage vMotion, resizing the volume, then detaching.

There’s also a video from VMware Explore that walks through the process. It’s sped up and doesn’t have any sound, and is on NetApp’s documentation site but not Explore’s video archive for some reason.

Above is my quick attempt to embed the video. Cease and desist in 3, 2, 1…

Additional Reading

- Great video from Tech Field Day covering this solution

- VMware’s VMC and FSxN documentation

- AWS’ VMC and FSxN documentation

- AWS’ FSxN documentation

- NetApp’s VMC & FSxN TCO Calculator

Page Updates

- 9/19 – Initial Posting