Welcome to the first of what’s likely to be a series of blog articles about Cribl. Don’t know what Cribl is? That’s okay, neither did I a few weeks ago. It’s something I found very interesting and valuable, and those of you who know me personally recognize my need write things down as part of a self education exercise. Gotta do something to make roots in the mind an engineer pulled six ways from Sunday.

So what is Cribl? Well I’m going to cheat and ask what ChatGPT thinks first…

Me >

Tell me about Cribl

ChatGPT >

Cribl is a software company that specializes in observability and data pipeline solutions. It offers a platform called Cribl LogStream, which helps organizations manage and optimize their log data. LogStream enables users to take control of their logs, route them to different destinations, transform them, and enrich them with additional data.

Here are some key features and capabilities of Cribl LogStream:

- Log Data Processing: LogStream allows users to process log data in real-time. It can parse, restructure, and filter logs based on specific criteria, such as timestamp, severity, or message content.

- Log Routing and Delivery: LogStream provides flexible routing options, allowing users to send log data to various destinations, such as Elasticsearch, Splunk, Kafka, or cloud storage services. It supports multiple protocols and formats, ensuring compatibility with different systems.

- Transformation and Enrichment: LogStream enables users to modify log data on the fly. It offers transformation functions to manipulate log content, extract relevant information, or anonymize sensitive data. Additionally, users can enrich log data by adding context from external sources or enrichments created within LogStream.

- Filtering and Sampling: LogStream helps reduce log data volume by applying filters and sampling techniques. Users can define rules to include or exclude specific logs, discard irrelevant events, or downsample data to a manageable level, optimizing storage and processing costs.

- Scalability and Performance: Cribl LogStream is designed for scalability, allowing users to handle large volumes of log data from multiple sources. It offers efficient processing and parallelization capabilities to ensure high-performance data pipelines.

- Visualization and Monitoring: LogStream provides a user-friendly interface for visualizing data pipelines and monitoring log processing in real-time. It offers dashboards and metrics to track the performance of log processing rules and troubleshoot issues.

Cribl LogStream is used by organizations across various industries to gain better insights from their log data, streamline log management, and improve overall observability. It helps reduce complexity, improve data quality, and optimize costs associated with log management and analysis.

As expected, ChatGPT had a pretty good handle on things, but is outdated in a few ways. As of writing this, ChatGPT’s knowledge base only goes up to 2021, so it’s not aware of things like Cribl Edge or Cribl Search. And, as I’m pedantic as ever, it’s not called LogStream anymore, just Stream.

That last line though, Cribl… “helps reduce complexity, improve data quality, and optimize costs associated with log management and analysis,” is pretty bang on.

Data & Observability

Before moving forward I think it’s best to set groundwork with the concept of Observability. “Observability” is one of those IT terms that makes my marketing bullshit senses start tingling. Depending on where you look the meaning shifts. To me observability is, at its simplest, the ability to see real time what’s happening in your environment so you can take action. Doesn’t matter if that data is comes from a hardware, software, or even operating systems.

Sounds a lot like visibility right? Well somewhere in the past few years folks have been trying to differentiate between visibility, somehow deciding that visibility is the technological equivalent of the crappy toilet paper you get in hotels, while observability is the 3-ply ultra plush brand. If visibility is the old and busted, post process, why not call it real time visibility? Or just have a higher expectation of what visibility can mean for your environment? Like I said, marketing bs senses are kicking in.

Data Pipelines

Another area worth having some sort of foundational knowledge about is the data pipeline. It’s something that’s been pretty much omnipresent since the dawn of modern computing and has only grown in complexity and scale since then.

As a concept it’s straightforward. Data is generated by a source and then sent to a destination repository. In the middle there’s usually some sort of data intelligence work taking place, such as analysis to determine what’s happening in an environment or a transformation to better store that data for later.

It’s simple to visualize a data pipeline as A -> B -> C and for a lot of scenarios it is that simple. But it can also get a lot more complex. Many enterprise organizations handle data pipelines which are generated by multiple sources, go to multiple destinations, and go through all sorts of processes along the way. The initial destination usually isn’t the end either. “C” might just be a common stopping point before something chews it up again.

Looking back at ChatGPT, generative AI is almost like a Windows 3D pipes of pipelines. You’ve got pipelines to gather information, pipelines to translate that information in format usable to intelligence generation, pipelines to push and pull data from data lakes, pipelines to feed user prompts into the engine, etc etc.

Much more common pipelines include pulling logs from firewalls, applications, servers, operating systems, and other security systems. Those are then used to assess things like stability and security. The latter is one of the biggest examples these days as every device is logging interactions and data passing through an environment. That can generate TB of data a day in raw formats… and how much of it is actually useful?

In the Cribl space a pipeline is a component of the overall process where Cribl performs functions such as transformations or routing. Sometimes it’s more accurately described as a “Processing Pipeline” but is often simplified just down to “Pipleline.” Cribl heard you liked pipelines so they put pipelines in your pipeline.

Enter “Cribl in the Middle”

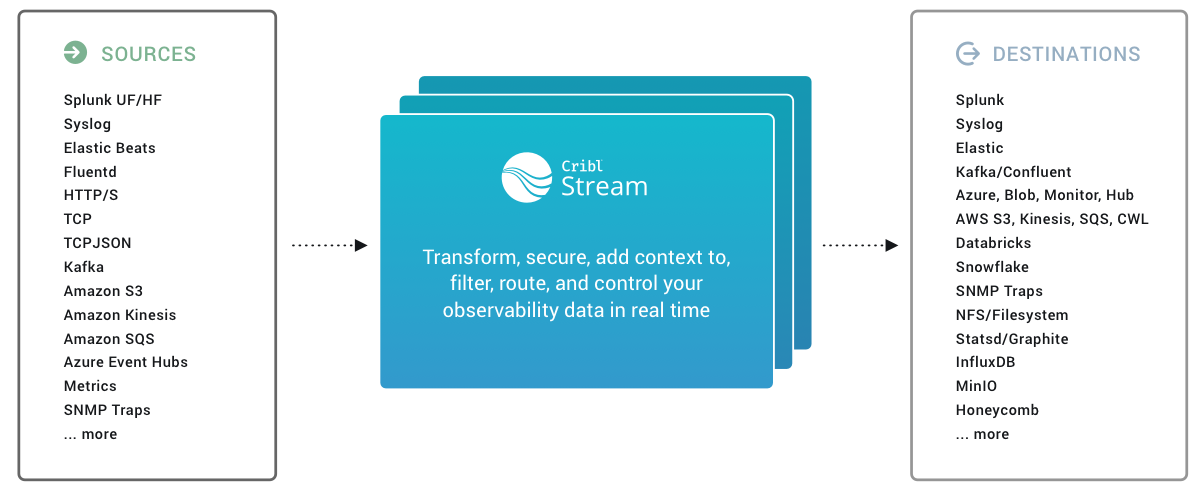

Where Cribl comes into play is the ability to simplify and improve the observability data stream. “Cribl in the Middle” is a great way to explain the platform at a high level, not only because it rhymes, but because it quickly sets an expectation where where it sits in an environment. It doesn’t produce data, nor does it analyze data, but it helps process data for consumption.

Let’s use a firewall as an example. They generate a lot data, not always in the most efficient format. Quite often you want to keep all of it, but how much actually needs to be processed on a day to day basis? A Cribl workflow here would ingest data coming off a firewall, stream all the logs to a low-cost data lake for long-term archiving, and use filtering to push the most relevant data to monitoring/security systems. Combine that with transformative capabilities, for example converting XML to JSON reducing storage and network requirements by 20-40%. To further pound this example home, consider the large number of firewalls that an enterprise may have. All that may feed to something like Splunk with licensing costs based on ingest capacities.

Because Cribl is designed around sitting in the middle of a data pipeline it supports various source and destinations right out of the box. You can also create your own with ways to share and import workflows from other Cribl customers.

Best of all, as someone who’s not really played around in this space, it’s all really simple to use. Okay, sure, you can dive in to where it’s well above my head. But an interface is straightforward and accessible for even a simpleton like me? That’s a big value add.

Cribl has three main packages/products/whatever way you want to describe them. Stream, which is the core application, Edge which is a logging agent, and Search which allows data to be examined before its even processed.

I’m going to cover them briefly below, but the intent is to follow this article with more in-depth looks (someday). This post is long as shit as is. Again, simpleton here, bite-sized chunks for the win.

Cribl Stream

Cribl Stream is the core component of the platform. While not necessarily needed for Edge and Search it’s almost like a keystone to the whole solution stack.

Stream is the pipeline between source and destination with all the juicy value add covered above. Its component constituents include GUI & API, collectors to push and pull data, route and pipeline processing, and environment monitoring.

Stream is typically deployed as a Leader Node alongside multiple Worker Nodes which actually handle the data processing. The Leader and Worker nodes could be collapsed to a single system for small workloads. Stream can also be deployed on-premises, in the cloud, or in a hybrid configuration. For the cloud, I’m pretty sure you can deploy services on cloud compute resources like you would on-prem, or just use Cribl’s SaaS platform. The hybrid configuration is particularly cool because you can have your management in a public cloud, but have workers on-premises that handle all the data workflow, ensuring that no data leaves a particular environment.

One of the nifty things about Stream is that it’s a single bin file whether installing a Leader or Worker node. You can also use the Leader to bootstrap the deployment of Worker nodes. This makes it a lot easier to deploy and deploy at scale.

I also want to point out that Cribl is trying to make things as easy as possible for folks to adopt the platform with a large amount of Cribl and community created modules to simplify common use cases like routing and data reduction. You can check a lot of these out in the Cribl Packs Dispensary.

Cribl Edge

Cribl Edge is somewhat confusing at first glance since Cribl uses a lot of the same marketing info from Stream just copy-pasted over. For example the “Stream – Visualized” diagram is the exact same one used for “Edge – Visualized.” Just because you have those components in a simple diagram doesn’t mean you can just reuse said diagram for every labeled component without a modicum of context.

Edge is basically a data gathering agent that you can deploy on Windows, Linux, Kubernetes, and Docker containers. If your environment doesn’t already have edge data collection abilities Edge provides that functionality. You can also use it to grab data from Splunk, Elasticsearch, and more.

It’s not just grab logs and ship ‘em off either. A lot of the intelligence that you can leverage with Stream can also apply at the edge, like dynamic sampling, statistic aggregation, parsing and masking.

Cribl Search

Search, as you might have guessed by the name, provides the ability to search through all that observability data you gathered. Let’s say your workflow consists of sending some data to Splunk, but the rest out to a data lake somewhere. Well if you need something that went into the data lake, but don’t want to ingest it all, you can use search to look for the specific datasets.

Cribl’s Documentation

I just want to take a few moments to praise the team behind Cribl’s documentation. It’s properly accessible, easy to read and not hidden behind a log-in screen or PDF somewhere. That, along with their readily available sandbox, makes learning the platform a lot easier than some other tech companies make it.

Pricing

There are two paid editions of Cribl, a Standard and Enterprise. Standard has various limitations, like 1 Worker Group, 5/TB of data a day of processing, and 8×5 support. Enterprise uncaps everything – unlimited worker groups and unlimited data processing, while also adding 24×7 support and RBAC functionality.

Standard and Enterprise also have individual billing rates for the amount of data ingestion, and if you use the Cribl Cloud, different pricing for worker node instances.

Ah, but I’m burying the lede here… there’s a free tier! Up to 1TB/day you can kick the wheels on pretty much everything but RBAC. Great for small POC and lab environments.

All this is subject to change on short notice, so make sure to check out their official pricing page for the latest info. But in my experience your best bet is to talk to a Cribl partner for all the details and proper pricing.

In Conclusion

To put it frankly, I think Cribl is some real cool shit. I wouldn’t have written this long ass beginners introduction if I didn’t think it was valuable to at least one person. Having worked in the data space for nearly two decades I’ve seen everyone shout from the treetops about how data generation is always growing exponentially… And they’ve been right. Between the simplicity of virtualized compute scaling, the ever networked world, and ever importance of security and logging, not only are capacity requirements growing but so is the volume and complexity. Something like Cribl can help manage that growth, putting it in the right place and helping save money along the way.

Additional Resources

- Gartner – Monetizing Observable Data Will Separate the Winners and Losers

- Cribl Observability Pipeline for Dummies

- Cribl Documentation

- Cribl Sandbox

Post History

- July 10, 2023 – Initial posting,

rudamenteryrudimentary proofreading - July 17, 2023 – Someone finally pointed out a typo